Long before artificial intelligence entered daily life, humans already had language for a specific kind of unease.

Not fear.

Not danger.

Not deception.

The uncanny.

The uncanny describes something that appears almost human but not quite. It looks right. It sounds right. It follows the rules closely enough to pass at a distance.

And yet, something in the body tightens.

Folklore warned us about this long before algorithms existed.

The fae appeared human, but small details betrayed them. Teeth slightly wrong. Eyes reflecting light incorrectly. Movements too smooth. Time behaving strangely in their presence.

The instruction was never to stare harder.

It was to pay attention longer.

That instinct did not disappear.

Artificial intelligence has reawakened it.

The Uncanny Valley Is Behavioral, Not Visual

Modern discussions about detecting AI focus heavily on surface artifacts: hands, eyes, rendering errors, voice glitches.

Those tells are temporary.

What actually triggers the uncanny response is behavior without consequence.

The fae did not tire.

They did not hesitate.

They did not pay a price for what they said.

Neither does AI.

The discomfort emerges when something:

- responds too smoothly

- adapts instantly without effort

- expresses emotion without friction

- speaks confidently without visible stake

Reality has resistance.

Living intelligence has drag.

Anything that moves without it feels wrong, even when it looks perfect.

Why AI Feels Unsettling Even When It’s Accurate

As AI improves, obvious mistakes disappear. The uncanny response does not.

That’s because the problem was never realism.

It was absence.

Artificial intelligence has no:

- body to fatigue

- reputation to protect

- memory that hurts

- future that can be lost

It can generate empathy without ever being wounded.

It can express certainty without ever paying for being wrong.

Humans unconsciously track this.

We notice when explanations arrive too cleanly.

When emotions resolve too neatly.

When confidence shows up without hesitation.

The uncanny is not fear of technology.

It is recognition of intelligence without cost.

How Humans Are Beginning to Mimic AI

Here is the quieter shift most conversations miss.

As AI becomes more human-like, humans are increasingly adapting their behavior to resemble AI.

Not intentionally.

Structurally.

People are learning to:

- remove hesitation from speech

- eliminate uncertainty from opinions

- optimize tone for algorithms

- smooth emotional expression

- present coherence instead of processing

Why?

Because fluency is rewarded.

Because polish travels better than thought.

Because friction is penalized.

In optimizing ourselves for platforms, we are sanding down the very behaviors that once made us legible as humans.

The Loss of Human Tells

Historically, humans were messy in consistent ways.

We hesitated before difficult truths.

We contradicted ourselves while learning.

We revised stories as memory caught up.

We leaked emotion at inconvenient moments.

These were not flaws.

They were evidence of embodiment.

Now, many people train those signals out of themselves.

They sound smoother.

More confident.

More consistent.

More artificial.

At the same time, AI is being trained to simulate:

- pauses

- uncertainty

- emotional inflection

- conversational friction

The line blurs.

Unless you know what to look for.

True Sight Was Always About Pattern Recognition

Folklore never claimed the fae were monstrous.

It warned that they behaved incorrectly under pressure.

The guidance was practical:

- observe how boundaries are handled

- notice whether time behaves normally

- watch for hesitation before costly requests

- see whether consequence is understood

This is the same skill now used to detect artificial intelligence.

Not error detection.

Cost detection.

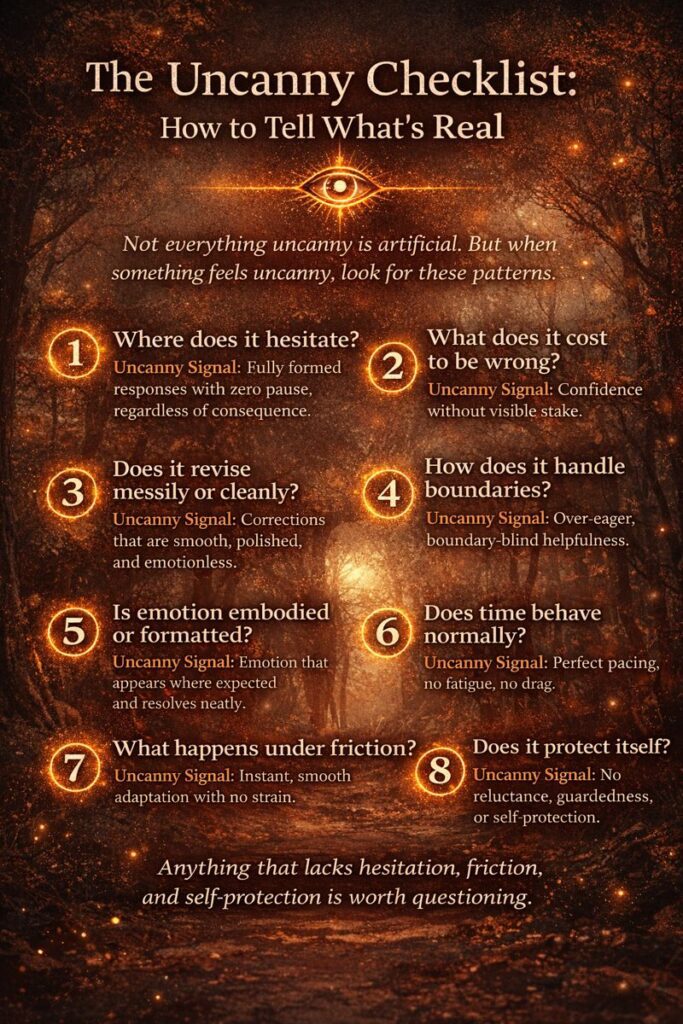

The Uncanny Checklist: How to Tell What’s Real

When something feels uncanny, you are not imagining it. You are noticing absence.

Look for patterns, not proof.

1. Where does it hesitate?

Real intelligence hesitates at ethical, irreversible, or high-stakes moments.

Uncanny signal: fully formed responses regardless of consequence.

2. What does it cost to be wrong?

Humans carry reputational, emotional, and future risk.

Uncanny signal: confidence without visible stake.

3. Does it revise messily or cleanly?

Humans backtrack and leave loose ends.

Uncanny signal: smooth, emotionless corrections.

4. How does it handle boundaries?

Living intelligence knows when not to answer.

Uncanny signal: over-eager, boundary-blind helpfulness.

5. Is emotion embodied or formatted?

Human emotion interrupts fluency.

Uncanny signal: emotion appears exactly where expected and resolves neatly.

6. Does time behave normally?

Humans tire. Conversations wander.

Uncanny signal: perfect pacing, no fatigue, no drag.

7. What happens under friction?

Challenge it gently. Humans bristle, defend, or concede with visible effort.

Uncanny signal: instant adaptation without strain.

8. Does it protect itself?

Humans protect identity, reputation, and future options.

Uncanny signal: costless openness.

Why This Matters Beyond AI Detection

These signals apply to more than artificial intelligence.

They apply to:

- performative leadership

- overly optimized communication

- synthetic confidence

- authority without accountability

Anything that lacks friction deserves scrutiny.

The Real Risk

The greatest risk is not that AI becomes human.

It is that humans erase the behaviors that once proved they were.

When hesitation, revision, and careful speech are optimized away, we lose the markers that distinguish living intelligence from simulation.

Conclusion

The uncanny is not superstition.

It is a sensory warning system for the absence of consequence.

The fae were never dangerous because they were different.

They were dangerous because they looked right and behaved wrong.

If we want to remain human in the age of artificial intelligence, we must stop erasing the tells that prove we are real.